UnSAMv2: Self-Supervised Learning Enables Segment Anything at Any Granularity

The Segment Anything Model (SAM) family has become a widely adopted vision foundation model, but its ability to control segmentation granularity remains limited...

Abstract

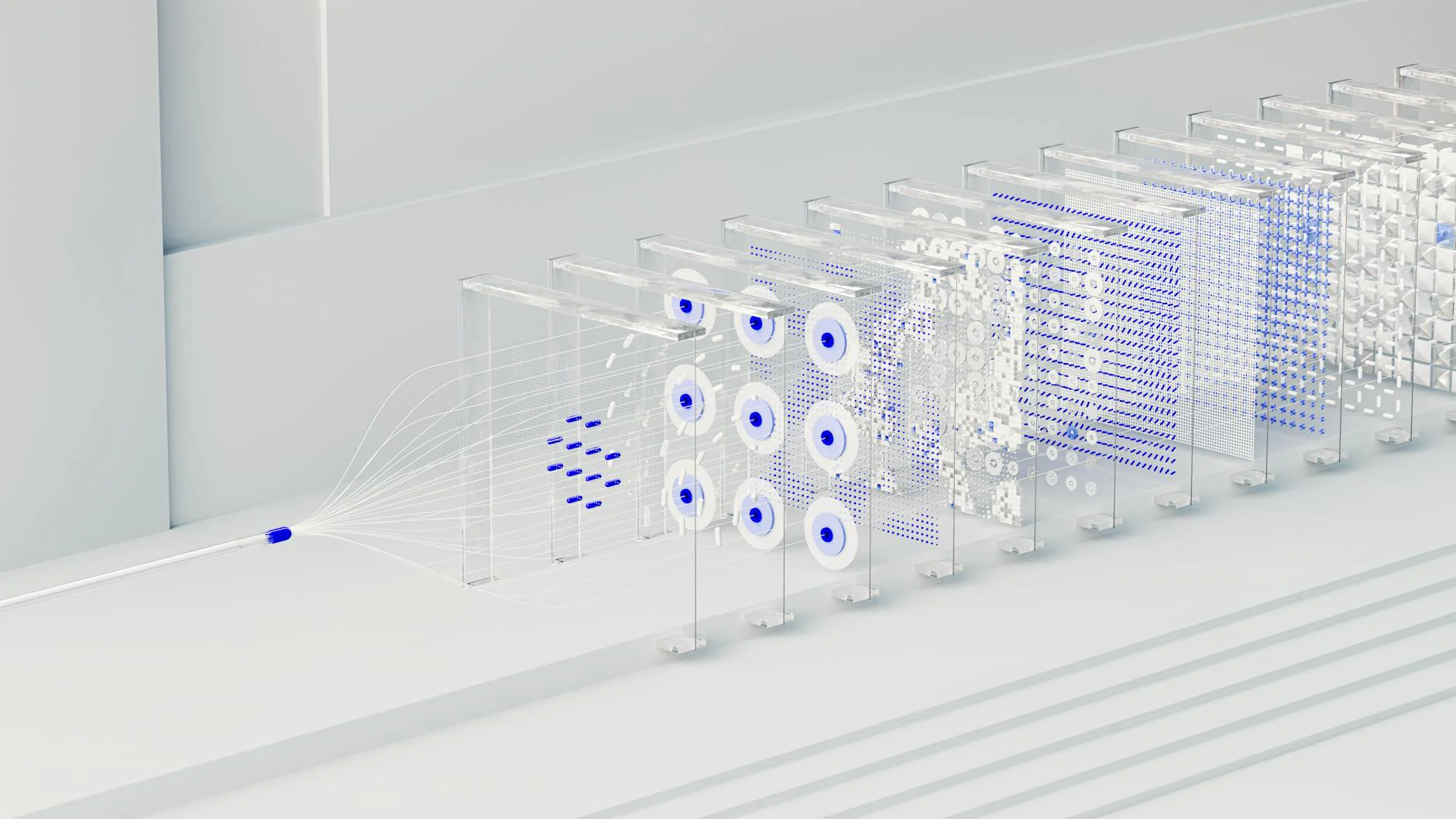

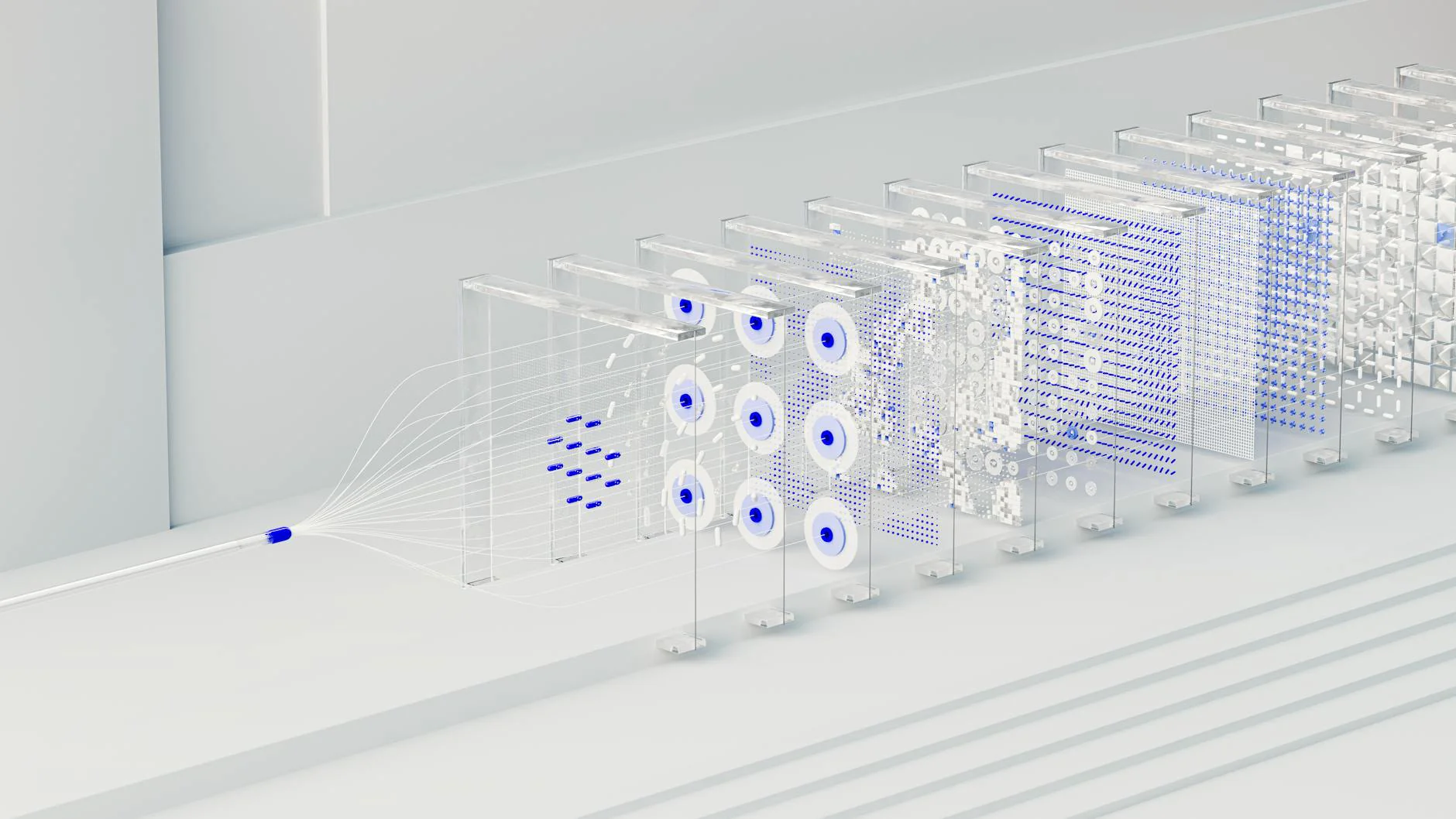

The Segment Anything Model (SAM) family has become a widely adopted vision foundation model, but its ability to control segmentation granularity remains limited. Users often need to refine results manually - by adding more prompts or selecting from pre-generated masks - to achieve the desired level of detail. This process can be ambiguous, as the same prompt may correspond to several plausible masks, and collecting dense annotations across all granularities is prohibitively expensive, making supervised solutions infeasible. To address this limitation, we introduce UnSAMv2, which enables segment anything at any granularity without human annotations. UnSAMv2 extends the divide-and-conquer strategy of UnSAM by discovering abundant mask-granularity pairs and introducing a novel granularity control embedding that enables precise, continuous control over segmentation scale. Remarkably, with only $6$K unlabeled images and $0.02%$ additional parameters, UnSAMv2 substantially enhances SAM-2, achieving segment anything at any granularity across interactive, whole-image, and video segmentation tasks. Evaluated on over $11$ benchmarks, UnSAMv2 improves $\text{NoC}{90}$ (5.69 $\rightarrow$ 4.75), 1-IoU (58.0 $\rightarrow$ 73.1), and $\text{AR}{1000}$ (49.6 $\rightarrow$ 68.3), showing that small amounts of unlabeled data with a granularity-aware self-supervised learning method can unlock the potential of vision foundation models.

Access Full Paper

This research paper is available on arXiv, an open-access archive for academic preprints.

Citation

Junwei Yu. "UnSAMv2: Self-Supervised Learning Enables Segment Anything at Any Granularity." arXiv preprint. 2025-11-17. http://arxiv.org/abs/2511.13714v1

About arXiv

arXiv is a free distribution service and open-access archive for scholarly articles in physics, mathematics, computer science, quantitative biology, quantitative finance, statistics, electrical engineering, systems science, and economics.

References

- [1]ResearchCredibility: 9/10Junwei Yu. "UnSAMv2: Self-Supervised Learning Enables Segment Anything at Any Granularity." arXiv.org. November 17, 2025. Accessed November 18, 2025.

Transparency Notice: This article may contain AI-assisted content. All citations link to verified sources. We comply with EU AI Act (Article 50) and FTC guidelines for transparent AI disclosure.