Quantum Machine Learning via Contrastive Training

Abstract

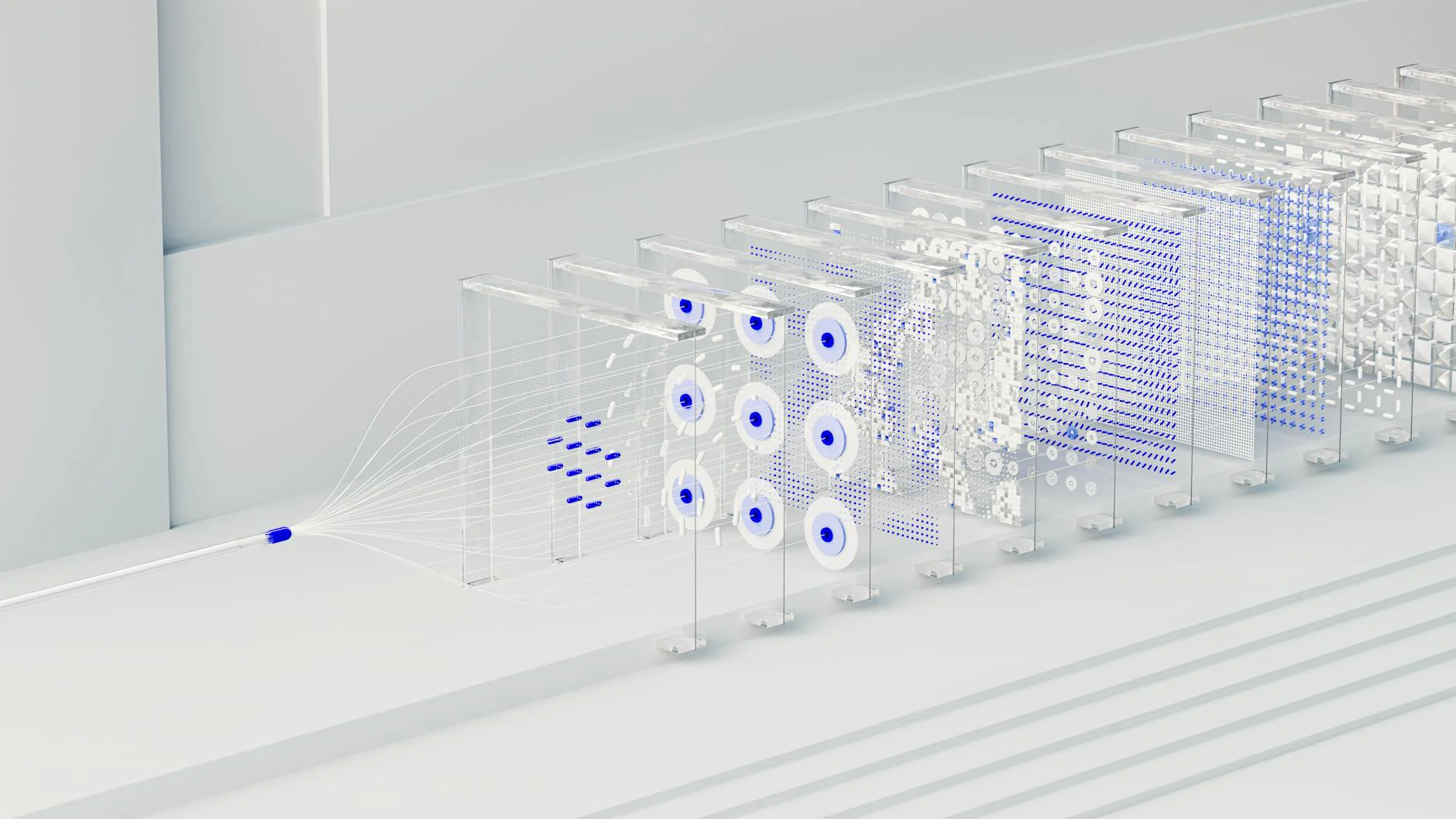

Quantum machine learning (QML) has attracted growing interest with the rapid parallel advances in large-scale classical machine learning and quantum technologies. Like their classical counterparts, QML models face challenges arising from the scarcity of labeled data, particularly as their scale and complexity increase. Here, we introduce self-supervised pretraining of quantum representations that reduces reliance on labeled data by learning invariances from unlabeled examples. We implement this paradigm on a programmable trapped-ion quantum computer, encoding images as quantum states. In situ contrastive pretraining on hardware yields a representation that, when fine-tuned, classifies image families with higher mean test accuracy and lower run-to-run variability than models trained from random initialization. The improvement is most pronounced when labeled training data are limited. We show that the learned invariances generalize beyond the pretraining image samples. Unlike prior work, our pipeline derives similarity from measured quantum overlaps and executes all training and classification stages on hardware. These results establish a label-efficient route to quantum representation learning, with direct relevance to quantum-native datasets and a clear path to larger classical inputs.
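
The abstract describes deriving similarity from measured quantum overlaps during contrastive pretraining, but does not state the loss function, the encoding, or the augmentations used. The following is a minimal classical simulation of that idea and not the authors' implementation. It assumes amplitude encoding of images, an InfoNCE-style loss, and additive noise as the augmentation; the names `amplitude_encode`, `overlap`, and `contrastive_loss` are hypothetical, and the overlap is computed exactly with NumPy rather than estimated from hardware measurements.

```python
import numpy as np

def amplitude_encode(image):
    """Flatten an image and normalize it to a unit vector (a simulated state).

    Assumption: amplitude encoding; the abstract does not specify the encoding.
    """
    vec = np.asarray(image, dtype=float).ravel()
    norm = np.linalg.norm(vec)
    if norm == 0:
        raise ValueError("cannot encode an all-zero image")
    return vec / norm

def overlap(state_a, state_b):
    """Squared overlap |<a|b>|^2 between two simulated states.

    On hardware this quantity would be estimated from repeated measurements;
    here it is computed exactly.
    """
    return float(np.abs(np.vdot(state_a, state_b)) ** 2)

def contrastive_loss(anchors, positives, temperature=0.5):
    """InfoNCE-style loss (an assumption): anchor i should overlap most with positive i."""
    sims = np.array([[overlap(a, p) for p in positives] for a in anchors])
    sims = sims / temperature
    # Numerically stable log-softmax over each row.
    sims = sims - sims.max(axis=1, keepdims=True)
    log_probs = sims - np.log(np.exp(sims).sum(axis=1, keepdims=True))
    # The diagonal holds the log-probability assigned to the matching pair.
    return float(-np.mean(np.diag(log_probs)))

rng = np.random.default_rng(0)
images = rng.random((4, 4, 4))  # four toy 4x4 "images"
# Hypothetical augmentation: small additive noise on each image.
augmented = images + 0.01 * rng.standard_normal(images.shape)

anchors = [amplitude_encode(x) for x in images]
positives = [amplitude_encode(x) for x in augmented]
# Pair each anchor with the wrong augmented view for comparison.
shuffled = positives[1:] + positives[:1]

aligned_loss = contrastive_loss(anchors, positives)
misaligned_loss = contrastive_loss(anchors, shuffled)
print(f"aligned loss:    {aligned_loss:.3f}")
print(f"misaligned loss: {misaligned_loss:.3f}")
```

In this sketch the loss is lower when each anchor is paired with its own augmented view than when the pairs are shuffled, which is the signal a contrastive objective uses to learn invariances from unlabeled data. A trainable model would apply a parameterized circuit before the overlap is measured; that step is omitted here.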

Access Full Paper

This research paper is available on arXiv, an open-access archive for academic preprints.

Citation

Liudmila A. Zhukas. "Quantum Machine Learning via Contrastive Training." arXiv preprint. 2025-11-17. http://arxiv.org/abs/2511.13497v1
